The Feature Code Generation Prompt

A Systemic Approach to Reproducible GenAI Coding

The Hidden Cost of Vibe Coding

Software teams adopting GenAI-assisted coding face a structural knowledge-transfer problem, not a tooling one. Developers must hand-craft elaborate prompts just to make LLMs produce code that aligns with their internal standards, architectures, and design intentions. Each prompt becomes a manual translation of tacit team knowledge: the naming conventions, architectural assumptions, and quality expectations that the model cannot infer on its own.

This gap gives rise to vibe coding: an improvisational, intuition-driven style of programming where developers steer the model through natural-language hints rather than systematic specification. It feels fluid and creative, but beneath the surface it amplifies information loss. Because the model lacks the shared mental models that bind real teams together, every request must restate context that should already be common ground. Developers repeat themselves, re-explain intent, and reconcile inconsistent outputs from a system that never truly “remembers” the organization’s logic.

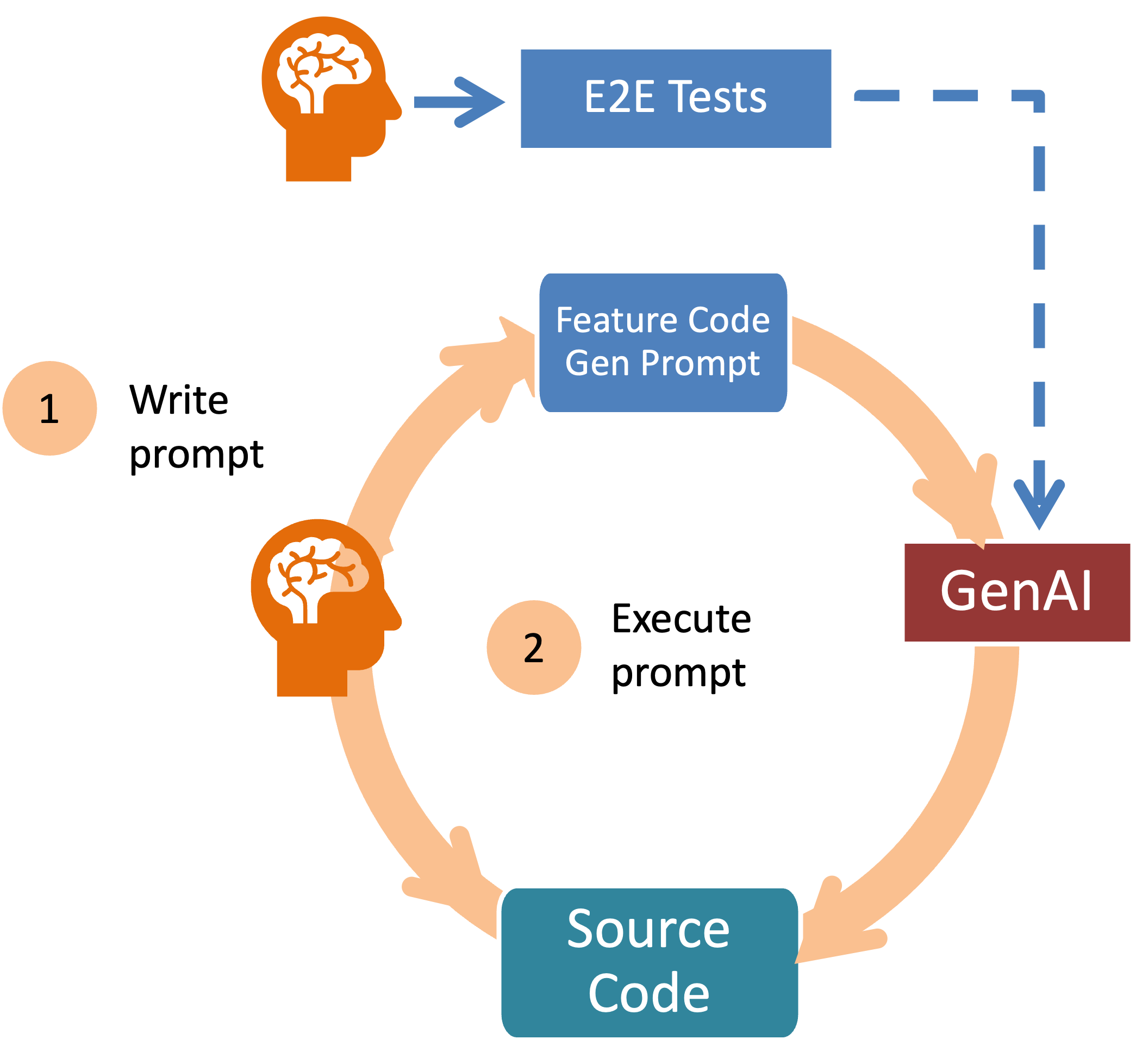

Some developers attempt a more disciplined “test-driven vibe coding.” They include end-to-end test cases directly in their prompts so that generated code at least satisfies an executable condition. It’s a step toward rigor, but still a patch: the underlying knowledge remains fragmented, externalized only as ad-hoc examples rather than a coherent design document. What emerges is code that passes tests but drifts from architectural intent.

In knowledge-centric terms, this is entropy in action — the steady erosion of shared understanding between human and machine. The LLM may produce syntactically correct code, yet it remains cognitively misaligned with how the team thinks and builds. That misalignment accumulates as inefficiency, rework, and silent technical debt disguised as speed.

Even in its test-driven form, vibe coding masks a deeper issue — unmanaged knowledge gaps between human reasoning and machine generation that accumulate as invisible technical debt.

When Speed Becomes Friction

The immediate consequence of unstructured GenAI usage is systemic inefficiency. Without a shared framework for translating product intent into machine-readable instructions, every developer engages in isolated experimentation. Codebases diverge in style, logic, and quality. Integration slows as teams spend cycles reconciling outputs that technically function but conceptually misalign. What feels like progress at the prompt level becomes drag at the system level.

Over time, this technical inconsistency compounds into organizational strain. Each developer must mentally reconstruct the missing context that the workflow fails to preserve. Attention shifts from solving problems to decoding the reasoning behind prior outputs. Cognitive load rises, review cycles lengthen, and the collective learning rate declines. The team’s energy, once directed toward building, is absorbed by synchronization overhead.

At scale, this erodes trust in both the tool and the process. AI assistance turns from an accelerant into a dependency that magnifies local inefficiency. Instead of amplifying collective intelligence, the system dissipates it across disconnected prompts and partial understandings.

Without structured knowledge flow, GenAI amplifies fragmentation — accelerating activity while silently degrading alignment and learning.

The Iterative Test-Driven Agentic Development System

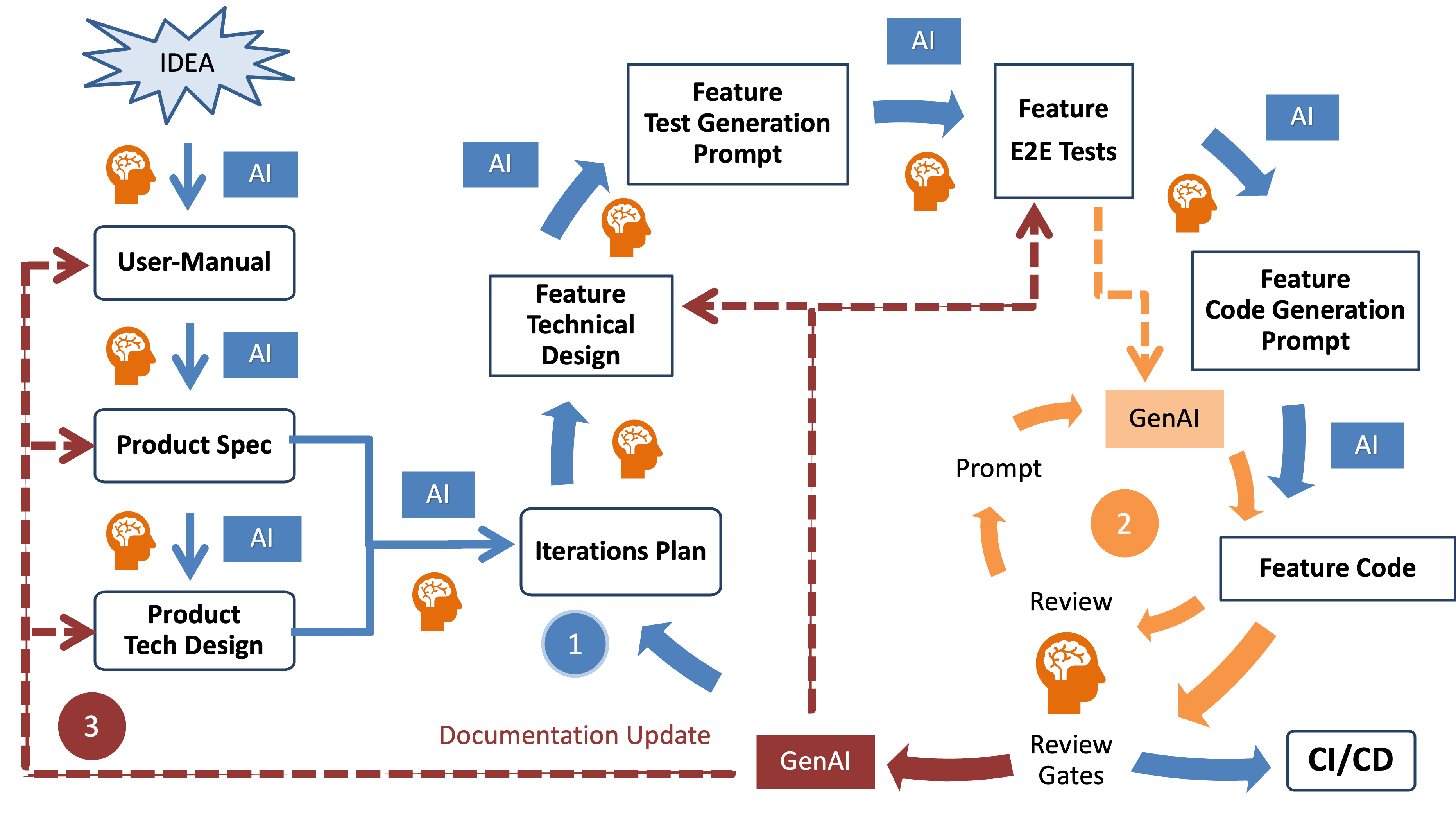

Where vibe coding improvises, the structured Iterative Test-Driven Agentic Development system compiles intent. Instead of relying on a stream of loosely related prompts, it integrates all the upstream artifacts (user documentation, behavioral specifications, architectural design, and test plans) into one coherent, machine-readable Feature Code Generation Prompt. The goal is not more prompting, but a reproducible method for transforming human understanding into executable guidance for GenAI code generation.

Each process step (User Manual → BDD Scenarios → Design → Tests) contributes a distinct layer of context to the LLM. The User Manual captures intent and scope; the BDD scenarios encode behavior; the Architectural and Feature Technical Designs define structure and constraints; and the Test specifications establish verifiable success conditions. Together, they converge into a single artifact: the Feature Code Generation Prompt, a complete specification for one user-facing feature.

This prompt encapsulates the following essential elements:

- Feature Overview

- Development Approach

- Test-Driven Development (TDD) cycle

- Implementation Plan

- Scenarios

- Architectural Approach

- File Structure

- Technical Design Details

- Tasks

- Code Examples

- Technical Requirements

- Success Criteria

In practice, this turns code generation into a structured process: developers no longer invent prompts but derive them from a shared knowledge base.
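As a minimal sketch of what "deriving" a prompt could look like, the snippet below assembles the twelve elements listed above into one document and refuses to produce a prompt if any element is missing. The function name `build_feature_prompt`, the Markdown heading layout, and the idea of passing section text as a dictionary are illustrative assumptions, not part of the method as described here.

```python
from typing import Mapping

# Section titles taken from the list of essential elements, in the same order.
SECTIONS = [
    "Feature Overview",
    "Development Approach",
    "Test-Driven Development (TDD) cycle",
    "Implementation Plan",
    "Scenarios",
    "Architectural Approach",
    "File Structure",
    "Technical Design Details",
    "Tasks",
    "Code Examples",
    "Technical Requirements",
    "Success Criteria",
]


def build_feature_prompt(feature_name: str, content: Mapping[str, str]) -> str:
    """Assemble one prompt (hypothetical layout); fail if a section is missing or empty."""
    missing = [s for s in SECTIONS if not content.get(s, "").strip()]
    if missing:
        raise ValueError(f"missing sections: {', '.join(missing)}")

    # Assumed output format: one top-level title, then one '##' heading per section.
    parts = [f"# Feature Code Generation Prompt: {feature_name}"]
    for title in SECTIONS:
        parts.append(f"## {title}\n\n{content[title].strip()}")
    return "\n\n".join(parts) + "\n"
```

In this sketch the section text would come from the upstream artifacts (User Manual, BDD scenarios, designs, test specifications), so a gap in the prompt points back to a gap in the knowledge base rather than to a developer's memory.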

The structured Iterative Test-Driven Agentic Development system replaces intuition with information. It transforms GenAI from a guessing partner into a reliable execution engine.

From Chaos to Coherence

Teams that adopt the structured Iterative Test-Driven Agentic Development system evolve from fragmented improvisation to coherent knowledge flow. Each feature becomes a closed feedback loop, connecting specification, design, tests, and implementation into one traceable sequence. The output of every iteration is not just working code but an auditable chain of reasoning: a persistent record of why the code exists and how it fulfills intent. Technical consistency becomes the default outcome rather than a quality-control challenge.

Practically, this yields reproducible, high-quality AI code generation with traceable lineage from specification to implementation. Developers and AI coding agents begin to operate from the same shared understanding of the system. Reviews accelerate because reasoning is explicit; onboarding shortens because design logic is discoverable; and cross-team alignment improves because every Feature Code Generation Prompt encodes a miniature knowledge base. Culturally, it shifts teams toward knowledge-centric engineering: prompts become shared knowledge artifacts, not ad-hoc instructions.
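One possible way to make that lineage concrete, offered only as a sketch: fingerprint the exact prompt text that produced a change and record the fingerprint next to the code, for example as a commit-message trailer. The trailer name `Prompt-SHA256` and the function `prompt_trailer` are hypothetical conventions introduced for illustration.

```python
import hashlib


def prompt_trailer(prompt_text: str) -> str:
    """Return a commit-message trailer (hypothetical name) identifying the prompt version."""
    # SHA-256 over the UTF-8 bytes: the same prompt text always yields the same digest.
    digest = hashlib.sha256(prompt_text.encode("utf-8")).hexdigest()
    return f"Prompt-SHA256: {digest}"
```

A reviewer could then recompute the digest of the stored prompt and confirm that the code under review was generated from that exact version.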

Over time, this alignment transforms GenAI from a productivity tool into a medium of collective intelligence. The organization gains not only cleaner code but a self-reinforcing system of trust, traceability, and continuous improvement.

By structuring how knowledge flows into AI, teams turn automation into amplification, scaling both their technical precision and their collective learning capacity.

Next Steps

The path forward is to formalize the workflow and test it on a real feature. Start by mapping your existing development artifacts, such as user stories, specs, designs, and tests, into the structure of Iterative Test-Driven Agentic Development. Pilot it on one self-contained feature, observe how information flows through the process, and refine the template before scaling it across teams.

From there, codify the practice: store prompts in version control, review them alongside code, and treat them as living documentation. Over time, this discipline will make writing the structured prompt as natural to your developers as writing a commit message, and far more valuable.
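Reviewing prompts alongside code is easier when a basic completeness check runs automatically. The sketch below assumes prompts are stored as Markdown files in which each element is a `## ` heading; that file convention and the function name `missing_sections` are assumptions for illustration.

```python
import re


def missing_sections(prompt_text: str, required: list[str]) -> list[str]:
    """Return the required section titles that have no '## <title>' heading."""
    # Collect every level-2 Markdown heading on its own line.
    found = {
        match.group(1)
        for match in re.finditer(
            r"^##[ \t]+(.+?)[ \t]*$", prompt_text, flags=re.MULTILINE
        )
    }
    # Preserve the caller's order so the report reads like the template.
    return [title for title in required if title not in found]
```

A pre-merge check could call this on each changed prompt file and fail the build when the returned list is non-empty.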

Begin by selecting a single feature to pilot the structured workflow and create its first complete Feature Code Generation Prompt — the foundation for every AI-assisted iteration to come.

Dimitar Bakardzhiev
